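LangChain: A Tool for Integrating Large Language Models

Introduction to LangChain

- Overview:

- Build applications with large language models through composability

- [Background] Large language models (LLMs) are emerging as a transformative technology that lets developers build applications they previously could not. Using an LLM in isolation, however, is often not enough to create a truly powerful application; the real value comes from combining it with other sources of computation or knowledge. LangChain aims to assist in developing these kinds of applications.

- Documentation: https://langchain.readthedocs.io/en/latest/index.html

- Code: https://github.com/hwchase17/langchain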

Installation

- Install the libraries

pip install langchain

pip install openai

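- Obtaining an OpenAI API key:

- The most convenient route is to buy one on Taobao for a few yuan

- Or register for an account at https://openai.com/blog/openai-api/; the application process may take a while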

- Set the environment variable

- Run in a terminal:

export OPENAI_API_KEY="..."

- Or add to a Python script:

import os; os.environ["OPENAI_API_KEY"] = "..."
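A missing key usually only surfaces when the first request is made, so it can help to check for it up front. The following is a minimal sketch, not part of LangChain; the helper name `get_openai_key` and the placeholder value `sk-placeholder` are assumptions made for illustration.

```python
import os
from typing import Mapping


def get_openai_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the API key from env, or raise a clear error if it is missing."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it in the shell or assign "
            "os.environ['OPENAI_API_KEY'] before using the OpenAI wrapper"
        )
    return key


# Example with an explicit mapping, so the sketch runs without a real key.
print(get_openai_key({"OPENAI_API_KEY": "sk-placeholder"}))
```

Calling `get_openai_key()` with no argument reads the real process environment.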

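LangChain Applications (tested with version 0.0.64)

Getting LLM Predictions (QA Task)

- Getting a prediction from the LLM is the most direct use case. A test example:

from langchain.llms import OpenAI # import the LLM wrapper

llm = OpenAI(temperature=0.9) # llm must be created before it is called

text = "What would be a good company name for a company that makes colorful socks?"

print(llm(text)) # returned: Socktastic!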

Simple arithmetic questions:

from langchain.llms import OpenAI # import the LLM wrapper

llm = OpenAI(temperature=0.9) # a higher temperature makes the output more random

text = "what is the results of 5+6?"

print(llm(text)) # returned: 11

text = "what is the results of 55+66?"

print(llm(text)) # returned: 121

text = "what is the results of 55555+66666?"

print(llm(text)) # returned: 122221

text = "what is the results of 512311+89749878?"

print(llm(text)) # returned: 89,876,189, finally a wrong answer (the correct sum is 90,262,189)
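The model's answers can be checked with ordinary arithmetic. The sketch below hard-codes the answers reported in the comments above (a single run at temperature=0.9, so a rerun may differ) and compares them with the true sums; the helper name `check_sums` is made up for this example.

```python
# Model answers as reported in the comments above (one run, temperature=0.9).
reported = {
    (5, 6): 11,
    (55, 66): 121,
    (55555, 66666): 122221,
    (512311, 89749878): 89876189,
}


def check_sums(cases):
    """Map each (a, b) pair to (true sum, reported answer, whether they match)."""
    return {(a, b): (a + b, got, a + b == got) for (a, b), got in cases.items()}


results = check_sums(reported)
for (a, b), (expected, got, ok) in results.items():
    status = "ok" if ok else "WRONG"
    print(f"{a}+{b}: expected {expected}, model said {got} -> {status}")
```

Only the last case fails: the true sum is 90262189, while the model answered 89876189.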

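Another example: here the model returns a synonym. To get a homophone instead, the input prompt has to be changed (an alternative is the Memory mode described in a later section):

text = "what word is similar to good?"

print(llm(text)) # returned: Excellent

text = "what word is homophone of good?"

print(llm(text)) # returned: Goo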

Prompt Templates

- In the company-name example above, a simpler experience for users is to ask only for the product the company makes rather than the whole sentence. This requires a template for the prompt:

from langchain.prompts import PromptTemplate

prompt = PromptTemplate(

    input_variables=["product"],

    template="What is a good name for a company that makes {product}?",

)

print(prompt.format(product="colorful socks")) # prints: What is a good name for a company that makes colorful socks?

text = prompt.format(product="colorful socks")

print(llm(text)) # returned: Socktastic!

text = prompt.format(product="chocolates")

print(llm(text)) # returned: ChocoDelightz!
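To see what the template step does without calling any model, the idea can be approximated in plain Python. This is a rough stand-in built on `str.format`, not LangChain's actual implementation; the class name `SimplePromptTemplate` is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SimplePromptTemplate:
    """Hypothetical stand-in for a prompt template, built on str.format."""

    input_variables: List[str]
    template: str

    def format(self, **kwargs: str) -> str:
        # Fail early with a readable message if a declared variable is missing.
        missing = [name for name in self.input_variables if name not in kwargs]
        if missing:
            raise KeyError(f"missing template variables: {missing}")
        return self.template.format(**kwargs)


prompt = SimplePromptTemplate(
    input_variables=["product"],
    template="What is a good name for a company that makes {product}?",
)
print(prompt.format(product="colorful socks"))
```

The user supplies only the product; the surrounding question stays fixed in the template.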

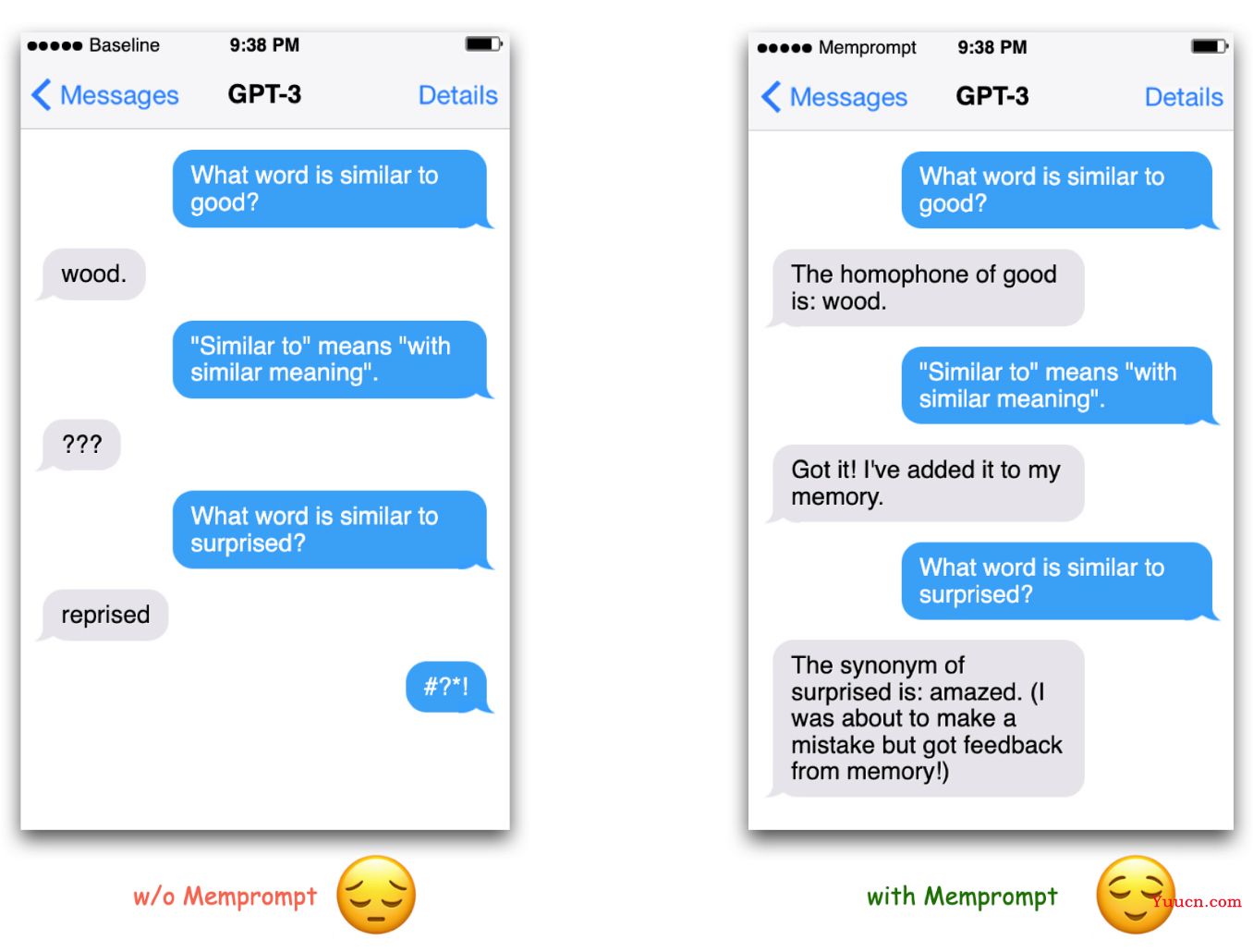

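Memory: record the history of LLM interactions and use it to correct model predictions

- This feature is based on the paper MemPrompt

- When the model makes a mistake, the user can point out what went wrong; that feedback is added to memory, and when a similar question comes up later the model retrieves the feedback first and so avoids repeating the mistake

- A ConversationChain example for the conversation task (ConversationBufferMemory mode). With verbose=True the chain prints the prompt it sends; the earlier turns are added to the prompt as short-term memory, which gives the model a short-lived ability to remember: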

from langchain import OpenAI, ConversationChain

llm = OpenAI(temperature=0)

conversation = ConversationChain(llm=llm, verbose=True)

conversation.predict(input="Hi there!") # output shown below

#> Entering new ConversationChain chain...

#Prompt after formatting:

#The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

#Current conversation:

#Human: Hi there!

#AI:

#> Finished chain.

# Out[53]: " Hi there! It's nice to meet you. How can I help you today?"

conversation.predict(input="I'm doing well! Just having a conversation with an AI.") # output shown below

#Prompt after formatting:

#The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

#Current conversation:

#Human: Hi there!

#AI: Hi there! It's nice to meet you. How can I help you today?

#Human: I'm doing well! Just having a conversation with an AI.

#AI:

#> Finished chain.

#Out[54]: " That's great! It's always nice to have a conversation with someone new. What would you like to talk about?"
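The verbose output shows the mechanism: every earlier turn is replayed in the next prompt. A minimal sketch of that idea in plain Python follows. It is not LangChain's code; `BufferConversation`, `PREAMBLE`, and the placeholder model `fake_llm` are names invented for this example.

```python
from typing import Callable, List, Tuple

PREAMBLE = "The following is a friendly conversation between a human and an AI."


class BufferConversation:
    """Hypothetical sketch: keep every turn and replay it in each prompt."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.history: List[Tuple[str, str]] = []  # (human, ai) pairs

    def build_prompt(self, user_input: str) -> str:
        lines = [PREAMBLE, "", "Current conversation:"]
        for human, ai in self.history:
            lines.append(f"Human: {human}")
            lines.append(f"AI: {ai}")
        lines.append(f"Human: {user_input}")
        lines.append("AI:")
        return "\n".join(lines)

    def predict(self, user_input: str) -> str:
        reply = self.llm(self.build_prompt(user_input)).strip()
        self.history.append((user_input, reply))  # remember the finished turn
        return reply


def fake_llm(prompt: str) -> str:
    # Placeholder model: reports how many Human turns the prompt contains.
    return f" I can see {prompt.count('Human:')} human turn(s)."


chat = BufferConversation(fake_llm)
first = chat.predict("Hi there!")
second = chat.predict("I'm doing well!")
print(first)
print(second)
```

Because the whole history is resent each time, the prompt grows with every turn, which is the problem the windowed and summarizing modes address.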

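- LangChain does not appear to integrate MemPrompt directly into QA tasks, but the conversation task can be used to retest the question that the QA task got wrong earlier. The result shows that a MemPrompt-style approach can indeed use user feedback to correct the model's prediction:

conversation.predict(input="what word is similar to good?") # returned: ' Synonyms for "good" include excellent, great, fine, and superb.'

conversation.predict(input="similar to means with similar pronunciation") # returned: ' Ah, I see. Synonyms for "good" with similar pronunciation include wood, hood, and should.'

The implementation here looks very similar to MemPrompt: rather than giving the answer alone, each response gives understanding + answer, so the user can judge from the stated understanding whether the model's response matches their intent, without having to judge the correctness of the answer directly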
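The feedback loop described above (store the user's correction, then retrieve it for similar questions) can be sketched as follows. This is a simplified illustration, not the MemPrompt implementation: word-overlap (Jaccard) similarity, the 0.5 threshold, and the class name `FeedbackMemory` are all assumptions chosen to keep the example short.

```python
import re
from typing import List, Set, Tuple


def tokens(text: str) -> Set[str]:
    """Lowercase word/number tokens of a question."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Shared tokens divided by all distinct tokens."""
    return len(a & b) / len(a | b) if a | b else 0.0


class FeedbackMemory:
    """Hypothetical store of (question, user feedback) pairs."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[str, str]] = []

    def add(self, question: str, feedback: str) -> None:
        self.entries.append((question, feedback))

    def lookup(self, question: str) -> List[str]:
        q = tokens(question)
        return [fb for old_q, fb in self.entries
                if jaccard(q, tokens(old_q)) >= self.threshold]

    def build_prompt(self, question: str) -> str:
        notes = self.lookup(question)
        if not notes:
            return question  # nothing relevant stored; send the question as-is
        return "Clarifications from the user: " + "; ".join(notes) + "\n" + question


memory = FeedbackMemory()
memory.add("what word is similar to good?",
           "similar to means with similar pronunciation")
print(memory.build_prompt("what word is similar to bad?"))
print(memory.build_prompt("what is the results of 5+6?"))
```

The first question is close enough to the stored one to pick up the clarification; the arithmetic question is not and goes through unchanged.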

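- Other memory modes for the conversation task

- ConversationSummaryMemory: similar to ConversationBufferMemory, except that the earlier conversation is condensed into a summary, which is added to the prompt

- ConversationBufferWindowMemory: adds a sliding window on top of ConversationBufferMemory, so only the most recent few exchanges are included, which keeps the memory buffer from growing too large

- ConversationSummaryBufferMemory: combines the two approaches above. Earlier conversation is condensed into a summary that is added to the prompt, and a maximum size is set for the prompt; once that limit is exceeded, the oldest exchanges are removed from the verbatim history (they survive only in the summary) to bring the prompt back within the limit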
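The difference between the windowed and the summary-plus-buffer modes is easier to see in code. The classes below are plain-Python sketches, not LangChain's implementations: `WindowMemory` and `SummaryBufferMemory` are hypothetical names, the limit is counted in whitespace-separated words for simplicity, and the "summary" is a placeholder string where a real system would ask an LLM to summarize.

```python
from collections import deque
from typing import Deque, List, Tuple

Turn = Tuple[str, str]  # (human, ai)


class WindowMemory:
    """Hypothetical sketch: keep only the last k turns."""

    def __init__(self, k: int) -> None:
        self.turns: Deque[Turn] = deque(maxlen=k)

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))  # the deque evicts the oldest turn when full

    def render(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


class SummaryBufferMemory:
    """Hypothetical sketch: recent turns verbatim, older turns as summary notes."""

    def __init__(self, max_words: int) -> None:
        self.max_words = max_words
        self.summary: List[str] = []
        self.turns: List[Turn] = []

    def _buffer_words(self) -> int:
        return sum(len(h.split()) + len(a.split()) for h, a in self.turns)

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))
        # Move the oldest turns out of the buffer until it fits the budget.
        while self._buffer_words() > self.max_words and len(self.turns) > 1:
            old_human, _ = self.turns.pop(0)
            # Placeholder for an LLM-written summary: keep only the human side.
            self.summary.append(f"Human previously said: {old_human}")


window = WindowMemory(k=2)
for human, ai in [("a", "A"), ("b", "B"), ("c", "C")]:
    window.save(human, ai)
print(window.render())

mixed = SummaryBufferMemory(max_words=4)
mixed.save("one two", "three four")
mixed.save("five", "six")
print(mixed.summary, mixed.turns)
```

The window simply forgets old turns, while the summary buffer trades them for a shorter note, so some of their content still reaches the prompt.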

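- More advanced uses of memory

- Adding Memory to a Multi-Input Chain: mainly for QA tasks. A corpus serves as the memory; for each input prompt, information similar to the prompt is retrieved from the corpus and added to the prompt, so that the model can draw on the corpus

- Adding Memory to an Agent: for an Agent with Google search capability, the conversation history can be recorded in memory so that the Agent interprets follow-up messages that depend on earlier turns more accurately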
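The corpus-as-memory idea from the first item can be sketched as a retrieve-then-prompt step. This is an illustration under simplifying assumptions: similarity is a bare count of shared words (real systems typically use embeddings), and `top_matches`, `build_prompt`, and the three-sentence corpus are made up for the example.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word/number tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_matches(question: str, corpus: List[str], k: int = 1) -> List[str]:
    """Return the k corpus entries sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(corpus, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, corpus: List[str], k: int = 1) -> str:
    """Prepend the retrieved entries to the question as context."""
    context = "\n".join(top_matches(question, corpus, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


corpus = [
    "LangChain provides prompt templates for building prompts.",
    "ConversationChain keeps the chat history in memory.",
    "Socks come in many colors.",
]
print(build_prompt("How does ConversationChain use memory?", corpus))
```

The resulting string would then be passed to the LLM in place of the bare question.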

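Summary

- Building on large language models such as OpenAI's GPT-3, LangChain provides a set of interfaces designed for easy integration into real applications, which lowers the barrier to deploying large language models in practice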