Event page: CSDN 21-Day Learning Challenge

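Having finished the handwritten digit recognition and clothing classification exercises, I want to pause for a moment, digest what I have learned, and summarize it. Today I will start with the output of Keras's model.summary().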

1. What is model.summary()?

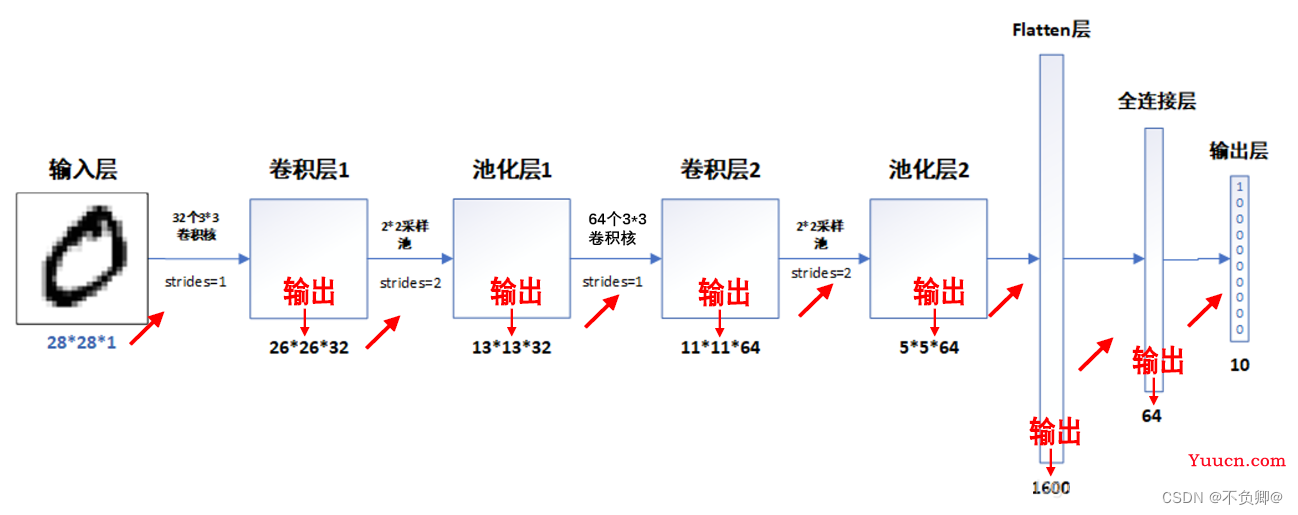

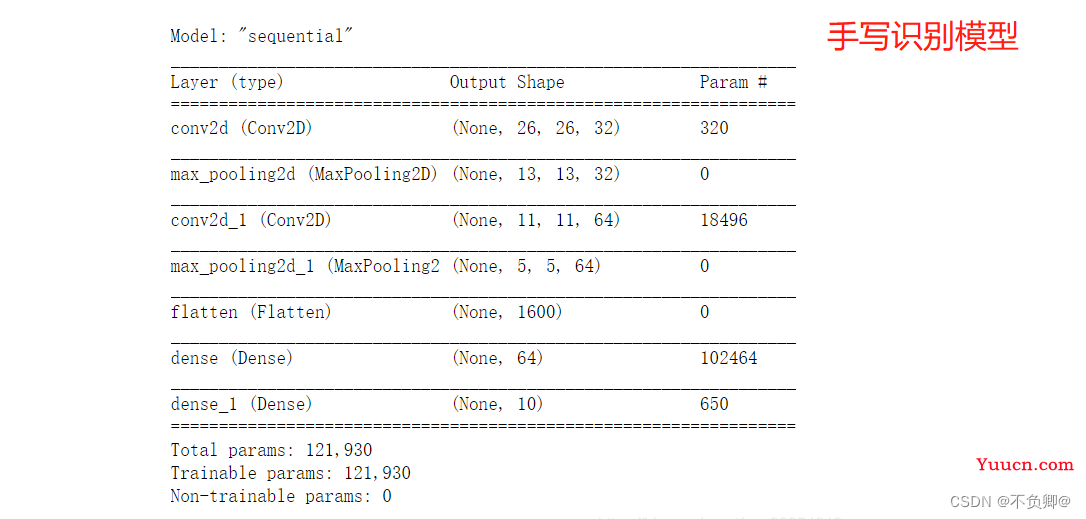

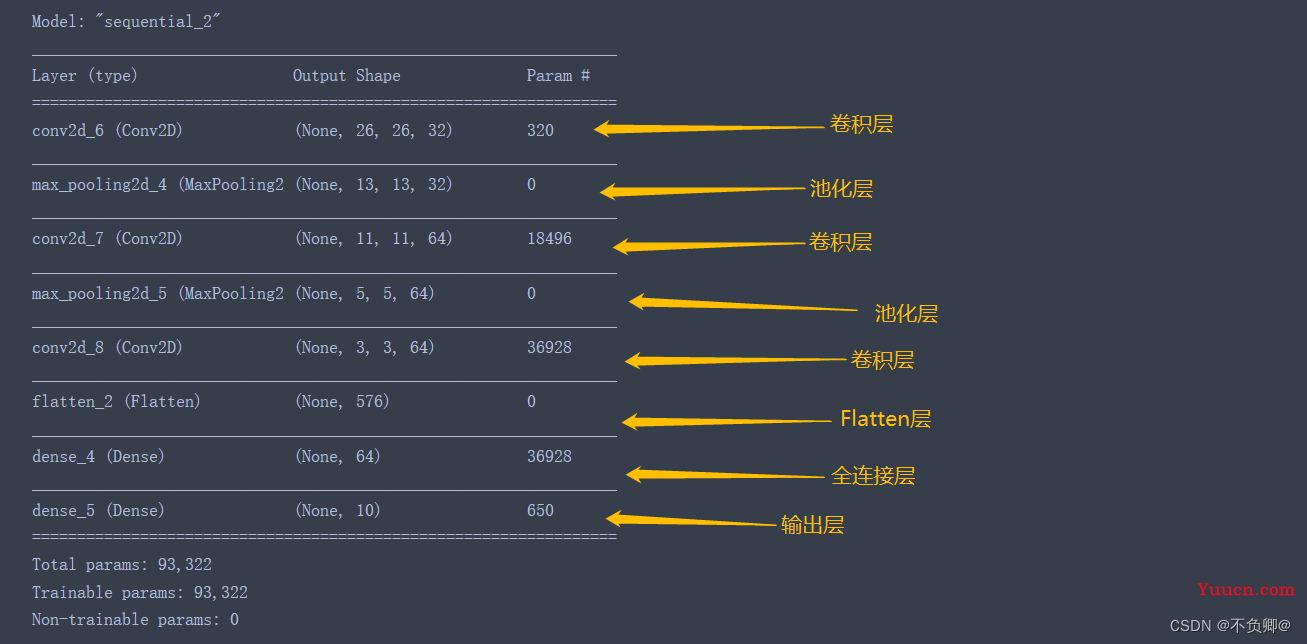

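When building a deep learning model, we call model.summary() to print the output shape and parameter count of each layer. Take the models we have just studied as an example: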

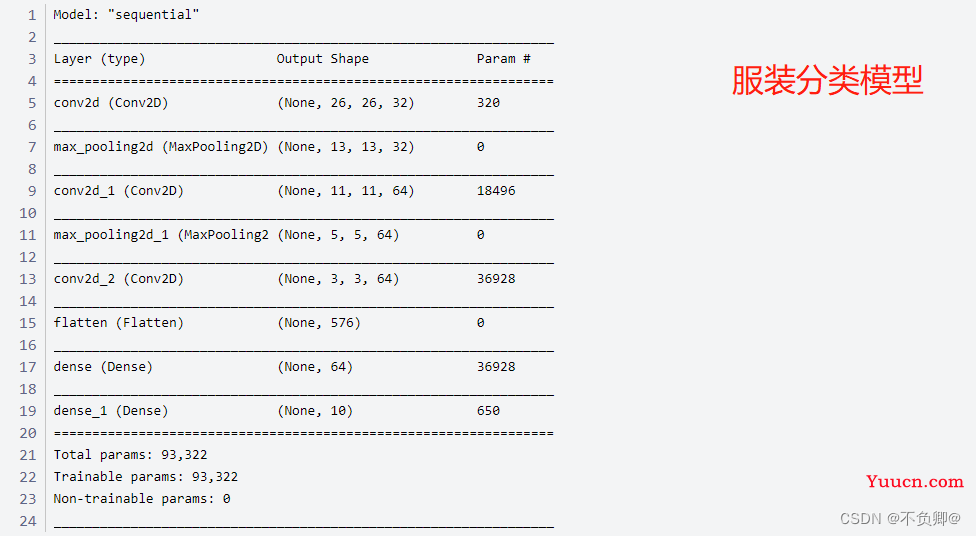

This shows that model.summary() lists the layers in the same order in which we built the model. Take the clothing classification model as an example:

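# Model construction code

from tensorflow.keras import layers, models

model = models.Sequential([

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),  # Conv layer 1, 3x3 kernels

layers.MaxPooling2D((2, 2)),  # Pooling layer 1, 2x2 downsampling

layers.Conv2D(64, (3, 3), activation='relu'),  # Conv layer 2, 3x3 kernels

layers.MaxPooling2D((2, 2)),  # Pooling layer 2, 2x2 downsampling

layers.Conv2D(64, (3, 3), activation='relu'),  # Conv layer 3, 3x3 kernels

layers.Flatten(),  # Flatten layer, bridges the conv layers and the fully connected layers

layers.Dense(64, activation='relu'),  # Fully connected layer, further feature extraction

layers.Dense(10)  # Output layer, one value per clothing class

])

model.summary()  # Print the per-layer summary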

2. What the model.summary() output means

Again using the clothing classification model as the example:

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_6 (Conv2D) (None, 26, 26, 32) 320

# Created by: layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),  # Conv layer 1, 3x3 kernels

_________________________________________________________________

max_pooling2d_4 (MaxPooling2 (None, 13, 13, 32) 0

# Created by: layers.MaxPooling2D((2, 2)),  # Pooling layer 1, 2x2 downsampling

_________________________________________________________________

conv2d_7 (Conv2D) (None, 11, 11, 64) 18496

# Created by: layers.Conv2D(64, (3, 3), activation='relu'),  # Conv layer 2, 3x3 kernels

_________________________________________________________________

max_pooling2d_5 (MaxPooling2 (None, 5, 5, 64) 0

# Created by: layers.MaxPooling2D((2, 2)),  # Pooling layer 2, 2x2 downsampling

_________________________________________________________________

conv2d_8 (Conv2D) (None, 3, 3, 64) 36928

# Created by: layers.Conv2D(64, (3, 3), activation='relu'),  # Conv layer 3, 3x3 kernels

_________________________________________________________________

flatten_2 (Flatten) (None, 576) 0

# Created by: layers.Flatten(),  # Flatten layer, bridges the conv layers and the fully connected layers

_________________________________________________________________

dense_4 (Dense) (None, 64) 36928

# Created by: layers.Dense(64, activation='relu'),  # Fully connected layer, further feature extraction

_________________________________________________________________

dense_5 (Dense) (None, 10) 650

# Created by: layers.Dense(10)  # Output layer, one value per clothing class

=================================================================

Total params: 93,322

Trainable params: 93,322

Non-trainable params: 0

_________________________________________________________________

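- Param #: the number of parameters (weights plus biases) that the layer itself holds, not the size of its input. So where do these numbers come from?

  a. Parameter count of a convolutional layer: (kernel height × kernel width × input channels + 1) × number of kernels

  Examples:

  First convolutional layer: (3 × 3 × 1 + 1) × 32 = 320

  Second convolutional layer: (3 × 3 × 32 + 1) × 64 = 18496

  Third convolutional layer: (3 × 3 × 64 + 1) × 64 = 36928

  b. Parameter count of a fully connected layer: (input dimension + 1) × number of neurons

  Examples:

  Fully connected layer before the output layer (dense_4): (576 + 1) × 64 = 36928

  Output layer (dense_5): (64 + 1) × 10 = 650

  The +1 is there because every convolution kernel and every neuron has one bias. Pooling and Flatten layers have no weights, so their Param # is 0.

- Output Shape: the shape of the data the layer outputs. The leading None is the batch dimension, which is not fixed when the model is built.

- Total params: the total number of parameters in the model, that is, the sum of Param # over all layers.

- Trainable params: the parameters that are updated during training.

- Non-trainable params: the parameters that are not updated during training; this model has none.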
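The two Param # formulas above can be checked with a few lines of plain Python. This is only a minimal sketch, not Keras code: the helper names `conv2d_params` and `dense_params` are made up for illustration, and the layer sizes are copied from the summary output of the clothing classification model.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """(kernel_h * kernel_w * in_channels + 1) * filters; the +1 is one bias per kernel."""
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(in_features, units):
    """(in_features + 1) * units; the +1 is one bias per neuron."""
    return (in_features + 1) * units


# Layer sizes taken from the summary of the clothing classification model.
param_counts = {
    "conv2d_6": conv2d_params(3, 3, 1, 32),
    "conv2d_7": conv2d_params(3, 3, 32, 64),
    "conv2d_8": conv2d_params(3, 3, 64, 64),
    "dense_4": dense_params(576, 64),
    "dense_5": dense_params(64, 10),
}

for name, count in param_counts.items():
    print(f"{name}: {count}")

# Pooling and Flatten layers contribute 0, so this sum is the "Total params" line.
total_params = sum(param_counts.values())
print(f"Total params: {total_params:,}")  # Total params: 93,322
```

The per-layer values match the Param # column (320, 18496, 36928, 36928, 650), and their sum matches the Total params line.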

3. Understanding how the shapes flow through the model

With the model.summary() output in hand, this figure is much easier to follow when we look at it again.
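The Output Shape column can also be reproduced by hand. The sketch below assumes what the summary implies: each 3x3 convolution uses stride 1 and no padding (so height and width shrink by 2), and each 2x2 pooling halves them, rounding down. The function names are hypothetical helpers written for this note, not Keras APIs.

```python
def conv_out(size, kernel=3):
    """Output size of a stride-1 convolution without padding."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Output size of non-overlapping pooling (rounds down)."""
    return size // pool


def trace_shapes(height=28, width=28, channels=1):
    """Return (layer kind, output shape) pairs for the clothing classification model."""
    shapes = []
    # (layer kind, number of kernels); pooling keeps the channel count unchanged.
    plan = [("conv", 32), ("pool", None), ("conv", 64), ("pool", None), ("conv", 64)]
    for kind, filters in plan:
        if kind == "conv":
            height, width, channels = conv_out(height), conv_out(width), filters
        else:
            height, width = pool_out(height), pool_out(width)
        shapes.append((kind, (height, width, channels)))
    # Flatten turns (h, w, c) into a single vector of h * w * c values.
    shapes.append(("flatten", (height * width * channels,)))
    shapes.append(("dense", (64,)))
    shapes.append(("dense", (10,)))
    return shapes


layer_shapes = trace_shapes()
for kind, shape in layer_shapes:
    # None stands for the batch dimension, as in the summary output.
    print(kind, (None, *shape))
```

The spatial size goes 28, 26, 13, 11, 5, 3, and flattening 3 × 3 × 64 gives the 576 values that feed the first fully connected layer, which is the same sequence shown in the summary.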